GOI: Find 3D Gaussians of Interest with an Optimizable Open-vocabulary Semantic-space Hyperplane

Ministry of Education of China, Xiamen University, Fujian, China

Semantic segmentation result of GOI. The left video shows the rendered 3D scene, and the right video shows the semantic segmentation result.

Abstract

3D open-vocabulary scene understanding, which is crucial for advancing augmented reality and robotic applications, involves interpreting and locating specific regions within a 3D space as directed by natural language instructions. To this end, we introduce GOI, a framework that integrates semantic features from 2D vision-language foundation models into 3D Gaussian Splatting (3DGS) and identifies 3D Gaussians of Interest using an Optimizable Semantic-space Hyperplane. Our approach includes an efficient compression method that uses scene priors to condense noisy high-dimensional semantic features into compact low-dimensional vectors, which are then embedded in 3DGS. For open-vocabulary querying, our approach differs from existing methods, which select regions by comparing the distance between their semantic features and the query text embedding against a manually set, fixed empirical threshold. A single fixed threshold is often not accurate across all scenes and queries, which makes it difficult to identify the target area precisely. Instead, our method treats feature selection as a hyperplane division of the feature space, retaining only the features that are highly relevant to the query. We leverage off-the-shelf 2D Referring Expression Segmentation (RES) models to fine-tune the semantic-space hyperplane, enabling a more precise distinction between target regions and others. This fine-tuning substantially improves the accuracy of open-vocabulary queries and ensures precise localization of the relevant 3D Gaussians. Extensive experiments demonstrate GOI's superiority over previous state-of-the-art methods.
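To make the contrast between a fixed threshold and a hyperplane division concrete, here is a minimal NumPy sketch. It assumes that semantic features and the query embedding live in the same space, and it uses plain logistic regression as a stand-in for OSH fine-tuning, with a synthetic binary mask playing the role of a 2D RES prediction. The function names, the toy data, and the choice of initializing the hyperplane from the query embedding and the threshold are our own assumptions for illustration, not the authors' implementation.

```python
import numpy as np


def cosine_threshold_mask(feats, text_emb, tau):
    """Baseline rule: keep features whose cosine similarity to the query exceeds tau."""
    f = feats / np.linalg.norm(feats, axis=1, keepdims=True)
    t = text_emb / np.linalg.norm(text_emb)
    return f @ t > tau


def hyperplane_mask(feats, w, b):
    """Keep features on the positive side of the hyperplane w . f + b = 0."""
    return feats @ w + b > 0


def fit_hyperplane(feats, target_mask, w0, b0, lr=0.5, steps=500):
    """Refine (w, b) by gradient descent on the mean binary cross-entropy.

    target_mask stands in for a 2D RES prediction over the same pixels as feats.
    """
    w, b = np.asarray(w0, dtype=float).copy(), float(b0)
    y = target_mask.astype(float)
    n = len(y)
    for _ in range(steps):
        z = feats @ w + b
        p = 0.5 * (1.0 + np.tanh(0.5 * z))  # numerically stable sigmoid
        err = p - y                         # dBCE/dz for each sample
        w -= lr * (feats.T @ err) / n
        b -= lr * err.mean()
    return w, b


# Toy data: two clusters with almost the same cosine similarity to the query.
rng = np.random.default_rng(0)
n = 100
text = np.array([1.0, 0.0, 0.0, 0.0])  # hypothetical query embedding
target = np.array([0.8, 0.6, 0.0, 0.0]) + 0.02 * rng.standard_normal((n, 4))
other = np.array([0.8, -0.6, 0.0, 0.0]) + 0.02 * rng.standard_normal((n, 4))
feats = np.vstack([target, other])
feats /= np.linalg.norm(feats, axis=1, keepdims=True)
labels = np.r_[np.ones(n, dtype=bool), np.zeros(n, dtype=bool)]  # stand-in RES mask

tau = 0.7
baseline = cosine_threshold_mask(feats, text, tau)
w, b = fit_hyperplane(feats, labels, w0=text, b0=-tau)
tuned = hyperplane_mask(feats, w, b)
print("threshold accuracy:", (baseline == labels).mean())
print("hyperplane accuracy:", (tuned == labels).mean())
```

On unit-length features, a hyperplane whose normal is the normalized query embedding and whose bias is `-tau` reproduces the fixed-threshold rule exactly, so fine-tuning starts from the baseline decision and then adjusts it using the mask. In the toy data both clusters have roughly the same cosine similarity to the query, so no single threshold separates them, while the fitted hyperplane can.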

Pipeline

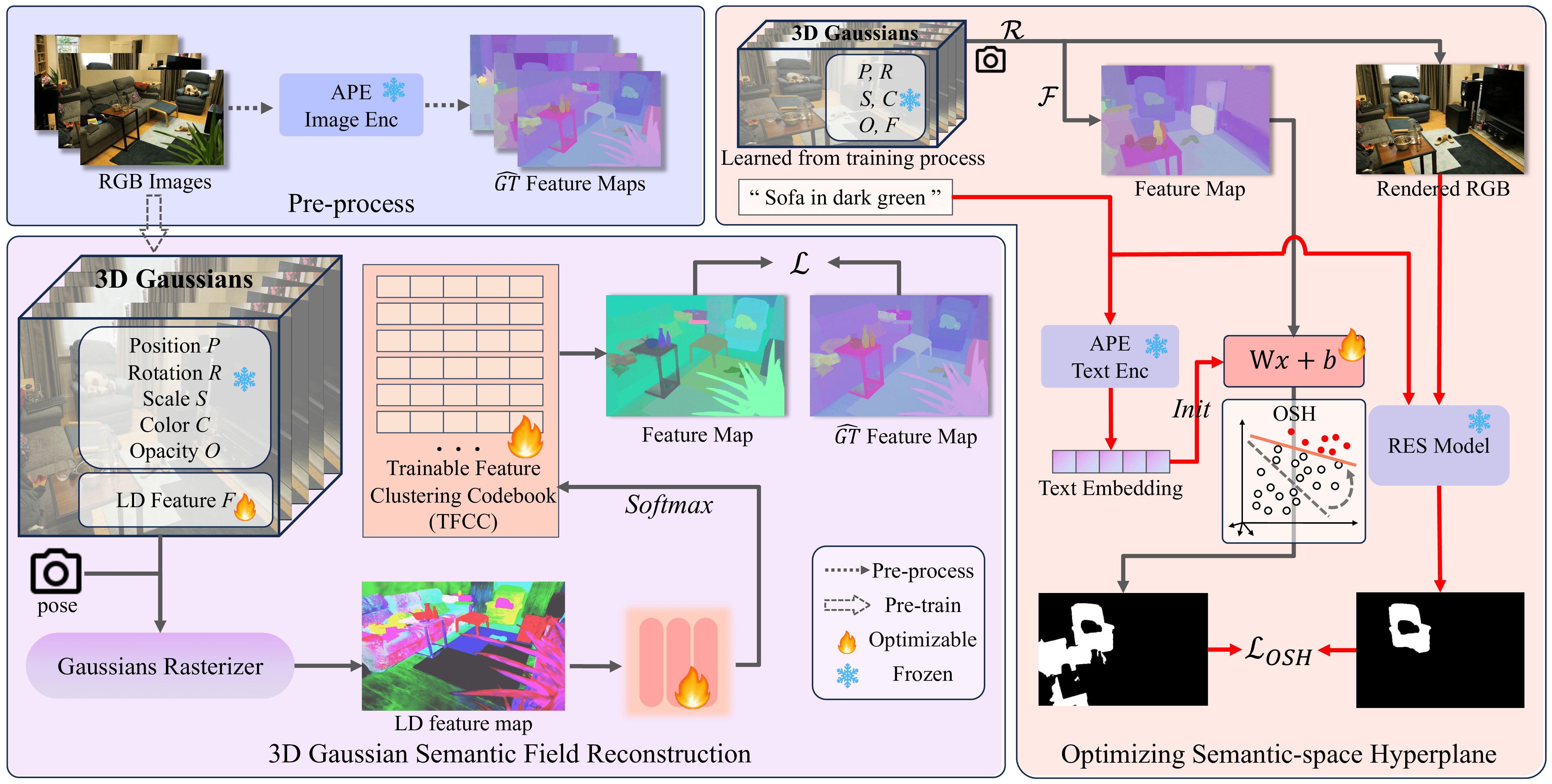

The framework of our GOI. Top left: reconstruction of a 3D Gaussian scene by encoding multi-view images.

Bottom left: the optimization process. For each training view, a low-dimensional (LD) feature map is rendered through the Gaussian Rasterizer and transformed into a predicted feature map via the Trainable Feature Clustering Codebook (TFCC).

Right: the open-vocabulary querying pipeline. The processes denoted by the two markers in the figure correspond to rendering and feature map prediction, respectively. The red line indicates operations performed only for the first query with a new text prompt. During these operations, the Optimizable Semantic-space Hyperplane (OSH) is fine-tuned to delineate the target region more precisely.
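The caption does not spell out how the TFCC maps the rendered LD features to the predicted feature map. One plausible reading, sketched below in NumPy under our own assumptions, is a soft assignment: each pixel's LD vector yields logits over K learnable codebook entries, and the predicted feature is the softmax-weighted mix of those entries. `decode_with_codebook`, `proj`, `codebook`, and `temperature` are hypothetical names; training, which would fit them against features from the 2D foundation model, is not shown.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along `axis`."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def decode_with_codebook(ld_map, proj, codebook, temperature=1.0):
    """Turn an (H, W, d) low-dimensional feature map into (H, W, D) predicted features.

    proj:     (d, K) hypothetical linear layer giving logits over K codebook entries.
    codebook: (K, D) hypothetical learnable high-dimensional entries, e.g. initialized
              from cluster centers of the 2D foundation model's features.
    """
    logits = ld_map @ proj / temperature  # (H, W, K)
    weights = softmax(logits, axis=-1)    # soft cluster assignment per pixel
    return weights @ codebook             # (H, W, D): convex mix of codebook rows


rng = np.random.default_rng(0)
H, W, d, K, D = 4, 5, 3, 6, 16
ld_map = rng.standard_normal((H, W, d))   # stands in for the rasterized LD map
proj = rng.standard_normal((d, K))
codebook = rng.standard_normal((K, D))
pred = decode_with_codebook(ld_map, proj, codebook)
print(pred.shape)  # (4, 5, 16)
```

Because each prediction in this sketch is a convex combination of codebook rows, decoded features stay close to a small set of scene-specific prototypes, which is one way a clustering prior could suppress noise in per-pixel features.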

Video Comparison

Below is a comparison between our method and other recent language-embedded NeRF and 3DGS methods.

prompt: "sofa in dark green"

prompt: "flowerpot on the table"

prompt: "green grass"

prompt: "the tablemat next to the red gloves"

More Results

prompt: "table under the bowl"

prompt: "Lego bulldozer"

prompt: "cookies"

prompt: "red apple"

prompt: "stool"

prompt: "pillow"

prompt: "chair"

prompt: "the shelf under a toy"

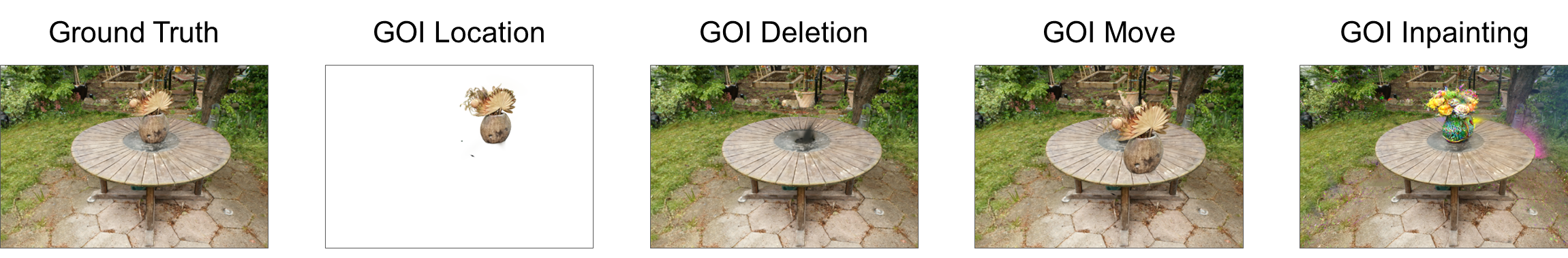

Scene Editing

Our method can be applied to a variety of downstream tasks, with the most direct application being the editing of 3D scenes.

prompt: "flowerpot on the table"
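As an illustration of the editing use case, the NumPy sketch below (ours, not the authors' code) assumes that each Gaussian already carries a semantic feature in the same space as the hyperplane `(w, b)`, and stores color as a single RGB value per Gaussian, a simplification of the spherical-harmonic color used in 3DGS. The dictionary keys, function names, and hyperplane values are hypothetical. Deleting or recoloring the selected Gaussians then reduces to boolean indexing.

```python
import numpy as np


def select_gaussians(gauss_feats, w, b):
    """Boolean mask of Gaussians whose feature lies on the positive side of w . f + b = 0."""
    return gauss_feats @ w + b > 0


def remove_gaussians(params, selected):
    """Return a copy of the scene with the selected Gaussians deleted."""
    keep = ~selected
    return {name: arr[keep] for name, arr in params.items()}


def recolor_gaussians(params, selected, rgb):
    """Return a copy of the scene with the selected Gaussians painted `rgb`."""
    out = {name: arr.copy() for name, arr in params.items()}
    out["rgb"][selected] = rgb
    return out


# Hypothetical four-Gaussian scene with 2D semantic features.
scene = {
    "means": np.zeros((4, 3)),
    "rgb": np.full((4, 3), 0.5),
    "opacity": np.ones((4, 1)),
    "feat": np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [-1.0, 0.0]]),
}
w, b = np.array([1.0, 0.0]), -0.5  # hypothetical fitted hyperplane
selected = select_gaussians(scene["feat"], w, b)
print(selected)                                         # [ True  True False False]
print(len(remove_gaussians(scene, selected)["means"]))  # 2
```

Both editing helpers return new dictionaries, so the original scene stays available for comparison or undo.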

Citation

If you want to cite our work, please use:

@article{goi2024,
  title={GOI: Find 3D Gaussians of Interest with an Optimizable Open-vocabulary Semantic-space Hyperplane},
  author={Qu, Yansong and Dai, Shaohui and Li, Xinyang and Lin, Jianghang and Cao, Liujuan and Zhang, Shengchuan and Ji, Rongrong},
  journal={arXiv preprint arXiv:2405.17596},
  year={2024}
}

Acknowledgements

The website template was borrowed from Michaël Gharbi and MipNeRF360.